Building a Synthesizer Editor with JavaScript, Part 1

In this series, we're going to do a deep dive into designing a MIDI-based editor for a hardware synthesizer: the KORG minilogue xd. We'll be using Max, and especially the js object, to accomplish this.

Who is this series for?

Don't worry if you're not a Max expert: most of the work we'll be doing isn't going to be in Max at all! And the Max objects we do use will be a good introduction or refresher for how to work with MIDI data, and how to build simple patchers in Max.

Although we're going to be looking at a lot of (simple) JavaScript code, this series won't be a tutorial in JavaScript programming. I'll explain the code as we go along, but I won't be explaining basic language features. If you're familiar with any text-based programming language, JavaScript will probably look familiar enough to you to follow along. And if you're inexperienced with text-based programming, JavaScript is a great language to get started with!

Finally, do you need to own a KORG minilogue xd? Well, sort of. If you want to actually use the scripts we'll develop to control some hardware, then yes (and if you have a KORG prologue (the big brother of the minilogue), the two synthesizer engines are similar enough that you can probably quickly adapt these scripts for your hardware). But even if you don't have access to this specific hardware, the concepts we'll be investigating are applicable to any MIDI-capable instrument (even plug-ins!), and could be worth your time.

MIDI in 2020

Even in Max's earliest versions in the 80s, one primary function was remote control of external hardware using the then-new Musical Instrument Digital Interface (MIDI) protocol. As Max has grown, MIDI support has remained and, over time, been extended and improved. Over the past several years, we've made a bunch of improvements to Max's MIDI functionality, adding support for MIDI Polyphonic Expression (MPE), (Non-)Registered Parameter Numbers (RPNs and NRPNs), 14-bit continuous controllers, to name some highlights.

Although music hardware has changed significantly in the last (nearly) 40 years, MIDI has endured and remained relevant. Most music production gear (of the non-control-voltage variety) produced today supports MIDI, either via the old-fashioned DIN-5 cables, or via USB, or even via minijack cables. The actual MIDI protocol hasn't changed much since the music industry accepted version 1.0 in 1983, although there have been extensions like General MIDI, MIDI Time Code and MIDI Machine Control, and more recently, MPE, which permits high-resolution control of individual notes (for many parameters which were historically global).

MIDI 2.0 was ratified only this year, and we expect MIDI 2.0 hardware and software implementations to start appearing before too long.

Why do I need an editor for my synthesizer?

You don't, of course. Most well-designed instruments work perfectly fine without being attached to a computer editor, and allow access to all of their features via buttons, knobs and a display. That was true of the venerable Yamaha DX7 in 1983, and remains true for parameter behemoths like the Dave Smith Instruments Prophet 12 or the Novation Peak. But an external editor offers a few advantages:

Most obviously, if you're working at the computer, sometimes it's comfortable to stay at the computer. If your synths are across the room, being able to tweak some values without standing up can be a time- and focus-saver.

You can see all of the parameters at once.

You can randomize parameters.

You can more easily save and restore the state of the synth (if you don't want to store to the internal memory of your synthesizer), which, depending on what you're up to, may make your work more portable.

Why the KORG minilogue xd?

I chose the KORG for a simple reason: I have one, and I hadn't built an editor for it yet. But that argument aside, the minilogue xd is a (relatively) affordable, compact and feature-rich synthesizer which happens to sound fantastic, and can be found in a lot of home studios for those reasons. Most importantly, it has excellent MIDI support and documentation.

A quick technical excursion: the KORG minilogue xd is a 3-oscillator hybrid synthesizer. The first 2 oscillators are VCOs (voltage-controlled oscillators), fully analog (and they will go out of tune to prove it). The third oscillator is digital, and, in addition to generating noise and digitally-generated basic waveforms, it can load user programs to become, for instance, a wavetable oscillator, an FM or additive oscillator or whatever any number of third-party developers have come up with.

The rest of the signal flow, including the filter, is analog, up until the effects section, which is again DSP-based. Like the digital oscillator, you can load external modulation, delay and reverb effects to complement the generous built-in set.

To further rationalize my choice: in my opinion, the minilogue xd falls into the Goldilocks zone of size, cost and parameters. It's got enough parameters to make it interesting to control, but not so many that we can't fit all the control elements into a single Max patcher window.

OK, with all that as prologue (get it?), let's get to work!

First do it fast, then do it right

Let's make a quick and dirty continuous controller generator in JavaScript. This isn't nearly as nice or useful as our final version will be, but it's a good way to get our feet wet and ensure that everything is working properly. The idea is simply to route a parameter name from Max to generate a valid continuous controller message for the associated parameter.

Let's start with the script. Here it is in its entirety:

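    autowatch = 1; // reload the script whenever the file is saved
    outlets = 1;   // the 'js' object gets a single outlet

    var cctls = [
        { name: "VCO 1 PITCH", cc: 34 },
        { name: "VCO 1 LEVEL", cc: 39 }
    ];

    function getRandomInt(min, max) {
        return Math.floor(Math.random() * (max - min + 1)) + min;
    }
    getRandomInt.local = 1; // helper only: not callable by a message from Max

    function anything(val) {
        var name = messagename; // the name of the message that triggered this call
        for (var c in cctls) {
            var param = cctls[c];
            if (param.name === name) {
                if (val === undefined) {
                    val = getRandomInt(0, 127); // no argument: pick a random value
                }
                outlet(0, val, param.cc); // value, then CC#, as 'ctlout' expects
                break;
            }
        }
    }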

This is a little more verbose than necessary because it adds some randomization in case you don't provide a value argument, but as you can see, it's pretty concise. At the top, after we set up the 'js' object's outlet, we define an Array named 'cctls', which contains two Objects representing CC parameters of the minilogue xd. In JavaScript, Array objects are indicated by square brackets ( [ ] ), and Objects are indicated by curly braces ( { } ), so we've made an Array of Objects. Each Object can have named properties, similar to a dictionary in Max.

For the time being, we're keeping track of a name (property 'name') and a CC# (property 'cc'). We use the name to look up a parameter when a message is received from Max, and we use the CC# to modify the associated parameter on the instrument. In the anything() function, we search for a parameter based on the message's name. If we find a match, we send a value -- either the value sent in from Max, or a randomly generated value if there was no argument -- as a message from the 'js' object's outlet, formatted for the 'ctlout' object in Max.

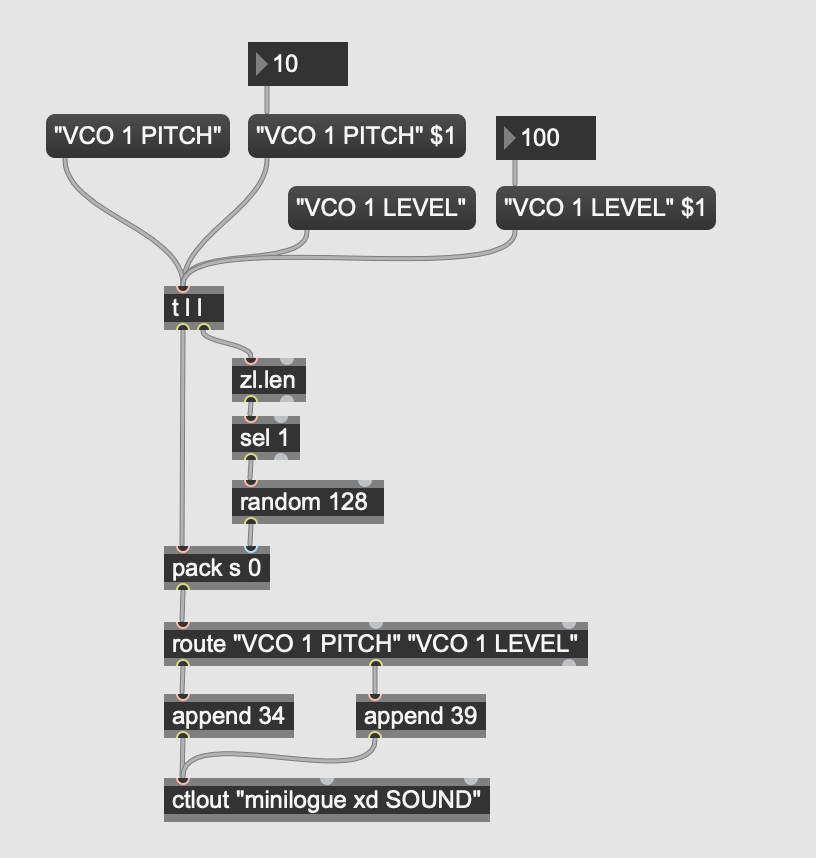

And here's the patcher:

Look how neat and clean that is! For comparison purposes, here's a good-faith effort to make the same patcher without using the 'js' object.

That's not so bad, but it should be clear that once we're managing more than a few parameters, maintaining this logic is going to get tedious, especially if the parameters have different ranges. Encapsulating this kind of functionality inside the 'js' object is a great way to clean up your Max patchers.

Give it a try (the patcher and the script are in the archive attached to this article). If your minilogue xd isn't responding, ensure that you haven't disabled "MIDI Rx CC" in the Global section of the Edit Mode (it's behind the 5th illuminated button when you're in Global Edit mode).

Modeling Synth Parameters in JavaScript

I have a little more to say before we push ahead. You might be wondering: why are we doing this in JavaScript at all? Isn't Max capable of doing everything we need to do? Sort of. We could build this in Max, but the patcher would be huge and difficult to debug, read and maintain. Here, for instance, are the guts of a (small part of a) Yamaha DX7 editor I built in Max before I knew better:

Any good programmer has the essential virtue of laziness, and building our editor in JavaScript is going to help us avoid doing a ton of repetitive and error-prone routing of data to and from our editor's interface (more on that later!).

Which brings us to modeling. Our goal, as in our first example, is to use JavaScript to manage the communication between our patcher and our instrument. As above, we'll create a table containing all of the parameters in a synth voice patch, with properties for the name and CC (or NRPN) index. Eventually, we'll be adding information about the parameter's range, a current value, maybe a default value. That is, we're going to create a model of the voice, its properties and its state using JavaScript.

When we change a parameter value from Max, we'll update the model and then send the change to the instrument. And when we change a parameter value at the instrument, we'll update the model and then send the change back into Max (to keep a user interface in sync with the state of the instrument, for instance).

For the geeks: this type of programming is called Model-View-Controller programming. I described the Model above, and the View is just the user interface (yes, our editor will have a user interface). The Controller is the part which handles communication between Max (or the minilogue xd) and the Model, and it will also live in JavaScript.
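As a rough illustration of the data flow just described, here is a minimal sketch of a model that is updated first and then forwards each change to the opposite side. It is plain JavaScript that runs outside Max; `makeModel`, `toInstrument`, `toView` and the `source` strings are hypothetical names chosen for this example, not part of this series' actual scripts.

```javascript
// Hypothetical sketch: a tiny parameter model with two directions of travel.
// None of these names come from Max or from the article's scripts.
function makeModel(params, toInstrument, toView) {
    var byName = {};
    for (var i = 0; i < params.length; i++) {
        byName[params[i].name] = params[i];
    }
    return {
        // source is "view" (a change made in Max) or "instrument" (a change
        // made on the synth). The model is updated first, then the change
        // is forwarded to the other side.
        set: function (name, value, source) {
            var param = byName[name];
            if (!param) {
                return false; // unknown parameter: nothing to do
            }
            param.value = value;
            if (source === "view") {
                toInstrument(param);
            } else {
                toView(param);
            }
            return true;
        },
        get: function (name) {
            return byName[name] ? byName[name].value : undefined;
        }
    };
}

// Usage: record what each side would receive.
var sentToInstrument = [];
var sentToView = [];
var model = makeModel(
    [{ name: "VCO 1 PITCH", cc: 34 }, { name: "VCO 1 LEVEL", cc: 39 }],
    function (p) { sentToInstrument.push([p.cc, p.value]); },
    function (p) { sentToView.push([p.name, p.value]); }
);
model.set("VCO 1 PITCH", 64, "view");        // Max -> model -> instrument
model.set("VCO 1 LEVEL", 100, "instrument"); // instrument -> model -> Max
```

Keeping the forwarding logic in one place like this means the patcher never has to know which side a change came from.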

Until recently, implementing this type of programming structure in Max was somewhat difficult, but there have been some recent additions to the 'js' object which make this much easier (and which we'll discuss in Part 2 of this series).

Let's Get Started

The first thing we need to do is take a look at the MIDI implementation of our synth. At the time of this writing, it's at version 1.01 (2020.2.10) and available on KORG's support website (it's also included in the download accompanying this series). Here's an excerpt from the "Recognized Receive Data" table in that document:

This table describes the channel messages to which the minilogue xd will react. Let's find one of the parameters we mapped in the first example: VCO 1 PITCH is described on line 396 in the document, and in the screenshot above.

What do we know about this parameter? We know its name ("VCO 1 PITCH"), we know that it's a CC message (the status byte is 0xBn, which is the hexadecimal indicator for a continuous controller message [the 'n' is the channel, so 0xB0 means CC on MIDI channel 0]). We know that it's CC 0x22 (decimal 34), and its value is apparently from 0-127 (the full range of a 7-bit CC parameter [that's from 0 to 2^7 - 1, if you're curious where those numbers come from]). We can modify our script above to add properties for min and max, for instance:

    var cctls = [
        { name: "VCO 1 PITCH", cc: 34, min: 0, max: 127 },
        ...
    ];
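To make the status-byte arithmetic described above concrete, here is a small sketch that assembles the three raw bytes of a continuous controller message. It runs as plain JavaScript outside Max (in a patcher, the 'ctlout' object takes care of this for you). The function name `ccBytes` is hypothetical, and channels are numbered 0-15 here, as in the 0xBn notation.

```javascript
// Hypothetical helper: build the raw bytes of a MIDI CC message.
// channel is zero-based (0-15), matching the 'n' in 0xBn.
function ccBytes(channel, cc, value) {
    return [
        0xB0 | (channel & 0x0F), // status byte: CC on the given channel
        cc & 0x7F,               // controller number, 7 bits
        value & 0x7F             // controller value, 7 bits
    ];
}

// VCO 1 PITCH at full 7-bit scale on channel 0: [0xB0, 0x22, 0x7F]
var msg = ccBytes(0, 34, 127);
```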

On the right hand side of the documentation page, there are a bunch of footnotes. These add some additional information about the parameters, for instance if they have an extended range or some kind of enumerated value. In this case, we care about footnotes 5-2 and 5-4.

Note 5-2 is critical, but we already checked and confirmed it above:

*5-2 : This message is recognized when the "MIDI Rx CC" is set to "On"

But what about Note 5-4?:

*5-4 : When a 10 bit value is received the lower 3 bits are first expected via a CC #63(0x3f) message.

That's important! This parameter is actually a 10-bit value, which means that its range is from 0-1023 (that's 2^10 - 1). If you edit the parameter on the instrument, you can confirm that -- the values available for pitch are much more granular than 128 steps. Let's modify our model:

    var cctls = [
        { name: "VCO 1 PITCH", cc: 34, min: 0, max: 1023 },
        ...
    ];

If you're familiar with MIDI, you know that continuous controller messages are restricted to the 7-bit value range of 0-127. According to Note 5-4, in order to use the full 10-bit range of this parameter, we need to send the lower three bits to a different CC (CC#63) first, and then pass the upper seven bits to the "real" CC (34). How do we do that in JavaScript? Like this:

    if (param.max > 127) {
        var bit3 = val & 7;
        var bit7 = val >> 3;
        outlet(0, bit3, 63);
        outlet(0, bit7, param.cc);
    }
    else {
        outlet(0, val, param.cc);
    }

In the above, we first get the 3 lower bits by ANDing (&) the value with 7 (written as a binary number, 7 is 111, so '& 7' gives us only the parts of the value contained in the 3 lower bits). Then we shift the upper 7 bits over by 3 bits (>> 3), deleting the lower 3 bits since we don't need them anymore (they are stored in 'bit3'). That gives us a 7-bit number (10 - 3 = 7) which we store in 'bit7'. Finally, we output 'bit3' to CC#63, and 'bit7' to the parameter's CC, as described above. Got it?
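Here is the same split as a standalone sketch with a worked number, runnable outside Max. The function name `split10` and the returned property names are hypothetical, and the clamping to 0-1023 is an extra precaution that the script above doesn't take.

```javascript
// Hypothetical helper: split a 10-bit value into the two CC values
// described in Note 5-4.
function split10(val) {
    // Assumption: out-of-range input is clamped rather than rejected.
    val = Math.max(0, Math.min(1023, val | 0));
    return {
        low3: val & 7,   // lower 3 bits, sent first on CC#63
        high7: val >> 3  // upper 7 bits, sent on the parameter's own CC
    };
}

// 683 is 1010101011 in binary:
// upper 7 bits 1010101 (decimal 85), lower 3 bits 011 (decimal 3).
var parts = split10(683);
```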

Just in case that wasn't clear, here's some ASCII art:

    abcdefghij                // 10-bit value
    abcdefghij & 7 = hij      // lower 3 bits
    abcdefghij >> 3 = abcdefg // upper 7 bits

Slurpin' the NRPNs

As I mentioned above, MIDI continuous controller messages are limited to values from 0-127. And there are only 128 CC numbers (also from 0-127, and even then, some of them are reserved for special duties). That would mean that MIDI instruments can only support a bit fewer than 128 parameters with the fairly coarse resolution of 128 steps. And although many MIDI instruments manage with those restrictions, MIDI provides another, more flexible mechanism for parameters called (Non-)Registered Parameter Numbers.

The RPNs are, as the name implies, registered for specific tasks and aren't used very often. The NRPNs, though, are increasingly popular for instruments with lots of parameters, or those with high-resolution parameter values, or both. Manufacturers like Elektron, Dave Smith Instruments/Sequential, Novation and others use NRPNs generously for their instrument lines.

There are 16384 NRPN parameters (14 bits worth), each of which can transmit a 14-bit value (0-16383). And, in case you were wondering, recent versions of Max have special objects for handling NRPNs, 'nrpnin' and 'nrpnout'.

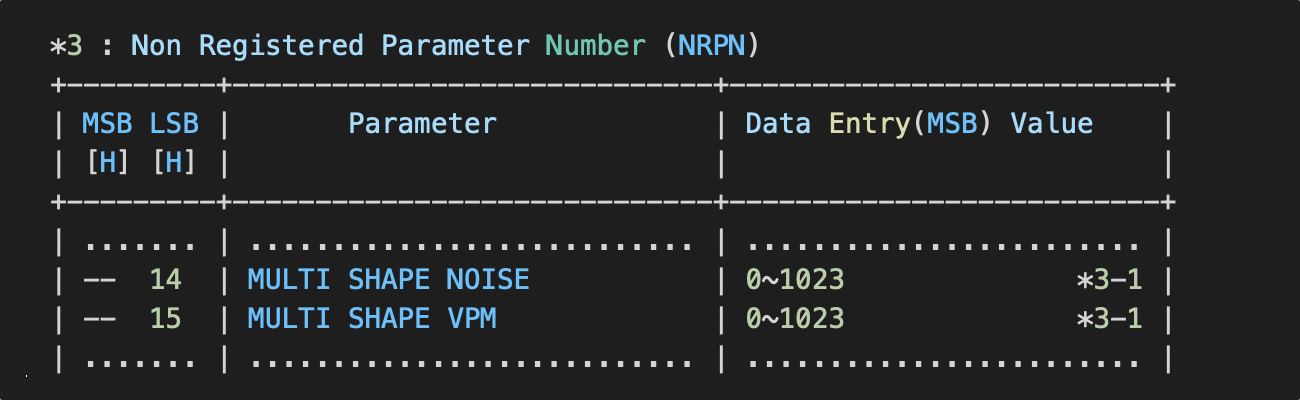
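For reference, a standard NRPN message is itself just a series of CC messages: CC#99 and CC#98 select the parameter number (upper and lower 7 bits), then CC#6 and CC#38 carry the value (upper and lower 7 bits). The sketch below shows that generic encoding as plain JavaScript outside Max. `nrpnToCcs` is a hypothetical name, and this is the textbook form, which is not necessarily what a given instrument sends or expects.

```javascript
// Hypothetical helper: expand a standard 14-bit NRPN into four [cc, value] pairs.
function nrpnToCcs(paramNumber, value) {
    return [
        [99, (paramNumber >> 7) & 0x7F], // NRPN number, upper 7 bits
        [98, paramNumber & 0x7F],        // NRPN number, lower 7 bits
        [6, (value >> 7) & 0x7F],        // Data Entry, upper 7 bits
        [38, value & 0x7F]               // Data Entry, lower 7 bits
    ];
}

// Parameter 300 = 2 * 128 + 44, value 1000 = 7 * 128 + 104.
var ccs = nrpnToCcs(300, 1000);
```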

Most of the critical parameters of the minilogue xd are implemented as CCs, but there are several which use NRPNs. You can find them in Table 3 of the MIDI implementation document. For instance, the Multi Engine's Shape parameter can be controlled this way. So let's extend our minimal model to accommodate them by adding a second Array alongside 'cctls':

    var nrpns = [
        { name: "MULTI SHAPE NOISE", nrpn: 0x14, min: 0, max: 1023 },
        { name: "MULTI SHAPE VPM", nrpn: 0x15, min: 0, max: 1023 }
    ];

We could just add the NRPNs to our original list of parameters, but since I know something about the future of this project, I've decided to keep them separate. This will allow us to perform special processing for NRPN parameters which isn't necessary for CC parameters and vice versa. More on that in the next installment.

Our processing code has gotten a little longer, but it's still simple:

    function getParamForName(name)
    {
        for (var c in cctls) {
            var param = cctls[c];
            if (param.name === name) {
                return param;
            }
        }
        for (var n in nrpns) {
            var param = nrpns[n];
            if (param.name === name) {
                return param;
            }
        }
        return null;
    }
    getParamForName.local = 1;

    function anything(val) {
        var name = messagename;
        var param = getParamForName(name);
        if (param) {
            if (val === undefined) {
                val = getRandomInt(param.min, param.max);
            }
            var whichOutlet = param.nrpn ? 0 : 1;
            var whichIndex = param.nrpn ? param.nrpn : param.cc;
            if (param.max > 127) {
                var bit3 = val & 7;
                var bit7 = val >> 3;
                outlet(1, bit3, 63); // always CC #63 for extra bits
                outlet(whichOutlet, bit7, whichIndex);
            }
            else {
                outlet(whichOutlet, val, whichIndex);
            }
        }
    }

The function getParamForName() searches both Arrays (CC and NRPN) and returns a parameter Object if found. Assuming that one is found, we determine which outlet, and which parameter number we want to use, depending on whether we're working with an NRPN or not. We can determine that by checking whether the parameter has an 'nrpn' property. We'll use outlet 0 (the leftmost outlet) for NRPN messages, and outlet 1 for CC messages.
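One detail worth noting: the expression `param.nrpn ? 0 : 1` tests whether the value is truthy, which works for the parameters modeled here but would misroute an NRPN whose index happens to be 0. A slightly more defensive sketch, using the hypothetical helper names `isNrpnParam` and `routeFor`, checks for the presence of the property itself. It is plain JavaScript and runs outside Max.

```javascript
// Hypothetical helpers: decide outlet and index by property presence,
// so that an NRPN index of 0 is still treated as an NRPN.
function isNrpnParam(param) {
    return Object.prototype.hasOwnProperty.call(param, "nrpn");
}

function routeFor(param) {
    var nrpn = isNrpnParam(param);
    return {
        outlet: nrpn ? 0 : 1,               // 0 = NRPN outlet, 1 = CC outlet
        index: nrpn ? param.nrpn : param.cc
    };
}

var a = routeFor({ name: "VCO 1 PITCH", cc: 34 });
var b = routeFor({ name: "HYPOTHETICAL", nrpn: 0 }); // made-up parameter
```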

The KORG NRPN implementation is a little unusual (the more you use MIDI, the more you'll discover that "unusual" implementations are not that unusual). It uses low-resolution NRPNs (a 7-bit value) in combination with our old friend CC#63 for transmitting 7-, 8-, 10- and 14-bit values. The footnotes to Table 3 in the MIDI Implementation document describe how to AND and bitshift the values to make this work.

For the moment, we're only modeling 10-bit values, and we can use the same code we wrote for 10-bit CC parameters. The 3 lower bits go to CC#63, the 7 higher bits to the NRPN's index using the low-resolution NRPN syntax.

Our patcher hasn't gotten much bigger, and that's mostly because of the extra parameter messages:

One quick remark: the parameters I've chosen to illustrate NRPNs are particularly interesting because they are going to cause us some problems moving forward! When you turn the Shape knob on the instrument panel and observe the output using something like MIDI Monitor (https://www.snoize.com/MIDIMonitor/) on macOS or MIDI-OX on Windows, you'll see that the minilogue xd sends CC#54, not one of these NRPNs.

However, when sending a complete voice patch to the minilogue xd, we need to know the individual values of Shape for the Noise, VPM and User sections of the Multi Engine oscillator. So we'll need to figure out how to update the state of the Shape parameter(s) properly when receiving the CC parameter change from the instrument. We'll encounter this problem more acutely in Part 2, and finally solve it in Part 3 (hint: it involves MIDI System Exclusive messages).

Take this version for a spin, and then we'll start wrapping up.

Going full circle

The last thing we want to do is listen to the instrument and update our model when changes arrive. For the moment, we're just going to post a message to the Max window -- after all, we don't have a real UI/View yet.

I mentioned above that the KORG NRPN implementation is a little unusual. If you look in the example patcher (minilogueTheFourth.maxpat), you'll see a little 'js fixKorgNrpn.js' object between the 'midiin' and 'nrpnin' objects. This little script "standardizes" the non-standard messages KORG is sending so that the 'nrpnin' object can parse them. In Max 8.1.9 and newer, there's a new @permissive mode to 'nrpnin' to permit this non-standard input.

Because of the problem described above with the transmission of the Shape parameter(s), I've added an NRPN parameter for the Voice Mode so that we can verify reception of NRPN parameters, too:

    var nrpns = [
        { name: "VOICE MODE TYPE", nrpn: 0x10, min: 0, max: 4,
          enum: [ "ARP LATCH", "ARP", "CHORD", "UNISON", "POLY" ] },
        ...
    ];

The Voice Mode Type parameter is a little different than the parameters we've seen so far. It's an enumeration (the values are provided in Note 3-5 of the MIDI Implementation document). We can use the enumerated values to map values to a string for display, as you'll see below.
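A minimal sketch of that mapping, runnable as plain JavaScript outside Max. The helper name `displayValue` is hypothetical, and falling back to the raw number when a value has no label is a design choice of this example rather than something the script does.

```javascript
// Hypothetical helper: turn a raw value into a display string.
function displayValue(param, val) {
    if (param.enum && param.enum[val] !== undefined) {
        return param.enum[val];
    }
    return String(val); // no enumeration, or value outside the list
}

var voiceMode = {
    name: "VOICE MODE TYPE", nrpn: 0x10, min: 0, max: 4,
    enum: ["ARP LATCH", "ARP", "CHORD", "UNISON", "POLY"]
};

var label = displayValue(voiceMode, 2);    // "CHORD"
var fallback = displayValue(voiceMode, 9); // "9"
```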

Here are the updated portions of our script:

    function getParamForIndex(index, isNrpn) {
        if (isNrpn) {
            for (var n in nrpns) {
                var param = nrpns[n];
                if (param.nrpn === index) {
                    return param;
                }
            }
        }
        else {
            for (var c in cctls) {
                var param = cctls[c];
                if (param.cc === index) {
                    return param;
                }
            }
        }
        return null;
    }
    getParamForIndex.local = 1;

    var lastLowBits = -1;

    function incoming(val, index)
    {
        var isNrpn = (inlet == 0); // message in left inlet
        var isCc = !isNrpn; // the opposite of isNrpn (NOT left inlet)

        if (isCc && index == 63) { // CC#63: low bits of values > 127
            lastLowBits = val; // cache the low bits
            return;
        }

        var param = getParamForIndex(index, isNrpn);
        if (param) {
            if (lastLowBits !== -1) {
                val = (val << 3) | lastLowBits;
            }
            // store the value in the parameter, we'll use it later
            param.value = val;

            post("Received value " + val + " for " + param.name);
            if (isNrpn) {
                post("( NRPN " + index);
            }
            else {
                post("( CC " + index);
            }
            if (param.enum) {
                post(": " + param.enum[val]);
            }
            post(")\n");
        }
        lastLowBits = -1;
    }

The function incoming() is used to process messages sent from the instrument.

The first thing it does is check for a message on CC#63. As we've seen, this special CC# is used for transmitting the low bits of a value greater than 127. If a CC#63 message comes, we cache the value in the global variable lastLowBits and return immediately.

If any other message arrives, the script attempts to resolve the CC# or NRPN# to a parameter via getParamForIndex() (we send NRPN messages into the left inlet and CC messages into the right inlet, so that the script can easily tell the difference). getParamForIndex() does approximately the same work as getParamForName() did, but it matches the type and index of the message, rather than the name. We need to know whether the parameter is a CC or NRPN because the indices aren't necessarily unique -- you could have both a CC#16 and an NRPN#16 -- so we need to tell getParamForIndex() where to look.
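One way to picture why the type matters: if all parameters were indexed in a single table, the key would have to include both the type and the number. The sketch below is an alternative to the linear search, not the approach the script takes. `buildIndex`, `lookup` and the "cc:16"-style keys are hypothetical, and the code is plain JavaScript that runs outside Max.

```javascript
// Hypothetical alternative: one lookup table keyed by type and index.
function buildIndex(cctls, nrpns) {
    var index = {};
    var i;
    for (i = 0; i < cctls.length; i++) {
        index["cc:" + cctls[i].cc] = cctls[i];
    }
    for (i = 0; i < nrpns.length; i++) {
        index["nrpn:" + nrpns[i].nrpn] = nrpns[i];
    }
    return index;
}

function lookup(index, number, isNrpn) {
    return index[(isNrpn ? "nrpn:" : "cc:") + number] || null;
}

// Made-up parameters that share the number 16 on purpose.
var table = buildIndex(
    [{ name: "SOME CC", cc: 16 }],
    [{ name: "SOME NRPN", nrpn: 16 }]
);
```

With the type folded into the key, `lookup(table, 16, false)` and `lookup(table, 16, true)` resolve to two different parameters.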

If the parameter is found, we check for a valid value in lastLowBits (>= 0) and combine it with the value passed to incoming(). We first shift the value 3 bits to the left and then OR the low bits in. Some more ASCII art to illustrate that process, in case you're new to bitwise operations.

    hij                           // lastLowBits
    abcdefg                       // current value
    abcdefg << 3 = abcdefg000     // value << 3
    abcdefg000 | hij = abcdefghij // value | lastLowBits

Because we know that all values > 127 are 10-bit values at the moment, this is straightforward. When we extend the Model to include 8- and 14-bit parameters, this function will get more complicated. Also note that we're storing the received value as a property of the parameter ('value'). We don't need it quite yet, but we will in the next installments of this series.

Finally, we post all of the information to the Max window (with an enumerated name, if available). At the very, very end, we invalidate the lastLowBits and are prepared to receive new messages.
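As a sanity check on the bit arithmetic, the split used when sending and the recombination used when receiving should be exact inverses of each other. The following standalone sketch (hypothetical function names, plain JavaScript outside Max) counts how many of the 1024 possible 10-bit values fail to survive the round trip.

```javascript
// Hypothetical helpers mirroring the send and receive arithmetic.
function splitValue(val) {
    return { low3: val & 7, high7: val >> 3 };
}

function combineValue(high7, low3) {
    return (high7 << 3) | low3;
}

// Count how many 10-bit values do not survive split followed by combine.
var mismatches = 0;
for (var v = 0; v <= 1023; v++) {
    var p = splitValue(v);
    if (combineValue(p.high7, p.low3) !== v) {
        mismatches++;
    }
}
```

If `mismatches` stays at 0, the two halves of the script agree on how a 10-bit value is packed.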

If you try this out, you'll see that changing the OSC 1 Pitch or Level, or the Voice Mode on the minilogue xd will now cause the script to respond by posting the new value to the Max window.

So Far, So Good

Let's call it a day. We've covered a lot of ground in this first article, and hopefully you've got a better understanding of how JavaScript can be used to handle the modeling of the parameter space of an instrument. Our Model is still pretty simple, but as we saw above with enumerated parameters, extending the Model is straightforward. We've still got some work to do, though -- we're about halfway toward having something working and about a third of the way toward having something I'd consider pretty good.

Linked to this article, you'll find all of the versions of the patcher and associated code we worked on. Additionally, there's a somewhat more complex version of this project in which all parameters (both CC and NRPN) have been modeled, including the non-10-bit NRPNs and enumerated parameters.

In the extended project, you can also send a bang to the 'js' object to perform a full randomization of your minilogue xd, so that version has some immediate utility, and might provide some incentive to dig into the code while you're waiting for Part 2.

In Part 2, we'll take a closer look at some of the code in the extended version, using it as the basis for extending our Model-View-Controller program to encompass a View.

In fact, we'll use our Model to create the View! Stay tuned.

For Further Study and Inspiration

While you’re working your way through this tutorial series, you may find Guillaume DuPont’s amazing Max for Live editor for the Oberheim Matrix-1000 to be a source of inspiration and good ideas. I'll let Guillaume say a bit about the project himself:

A friend of mine lent me his Matrix-1000 for a couple of weeks. He bought this nice piece of gear for an album project and he wanted to create his own sounds. He also bought a dedicated hardware controller, but it turned out not to be very easy to use... So I suggested to him that a dedicated software MIDI editor, which he could use directly in Ableton Live, might be a good solution. It was just before the first lockdown, and I wanted to start a project or something that let me spend my time intelligently.

I had used Max a little bit before - the basics, really - so I decided it was time to learn Max in depth and from scratch, which I did with the help of the great tutorials found on the Cycling '74 website, the online documentation and with great help from Jeremy himself.

The Matrix-1000 Editor is finished and operational. Jeremy and I have done some conclusive tests, and the device seems to be stable and usable with Ableton Live 10 and Max 8. I've decided to share this device freely with the Max/Max for Live community.


by Jeremy on January 6, 2021